Last week we published the story, “Policing the Future,” an examination of how the St. Louis County Police Department — in the wake of the death of Michael Brown and the ensuing protests in Ferguson — is using new software that predicts where crime will happen.

The subject of ‘predictive policing’ has received a lot of press over the last year, and has become a focal point for debates about how police departments can improve their reputations. A lot of those debates made their way, directly or indirectly, into comments submitted by the story’s readers. We collected a handful from various corners of the Internet and have curated them here.

Race and the “feedback loop”

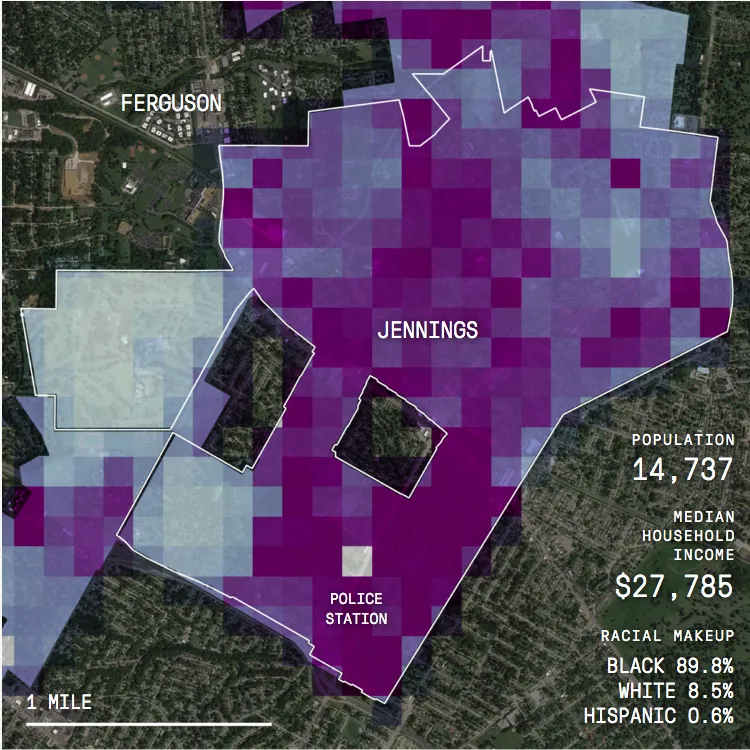

Many readers were concerned that if predictive policing software analyzes arrest records — records they believe reflect disproportionate policing of minority communities — it will perpetuate the over-policing of those communities. “How is this not inherently subject to feedback loops?” asked ‘Ryvar’ on Metafilter. If the “computer predicts — correctly, and without deliberate racism — that more crime will occur in poor neighborhoods (which will correlate with black neighborhoods),” it “leads to more cops patrolling those areas, leads to more opportunistic arrests + confrontations due to distrust between the community and the police…”

It’s a problem that Sgt. Colby Dolly, the police official who brought predictive policing to St. Louis County, has also thought about. During our interview, he explained that he aimed to limit his county’s use of the software to serious felonies — often violent — that produce 911 calls, and not drug arrests. Dolly was acutely aware that some communities felt they were not being served enough by police, rather than over-policed, and this same concern showed up in a few comments. “As one who lived and worked (for 30+ years) in a high crime predominantly black inner city neighborhood,” wrote commenter ‘rmhsinc’ on Metafilter, “the increased presence of police in the high crime area — whether bicycle, car, auto or on foot — was welcomed and sought.”

This idea that black communities have in some cases wanted more interaction with the criminal justice system has cropped up a lot in the past year, from Jill Leovy’s “Ghettoside,” a book about the lack of resources for homicide investigations in Los Angeles, to Michael Javen Fortner’s “Black Silent Majority,” about how black communities a generation ago supported tougher enforcement to protect themselves from the crack epidemic.

Transparency and how there isn’t enough of it

The secretiveness surrounding how crime-prediction software companies are using and analyzing data bothered some readers. A user going by ‘open-source-govt’ wrote on Reddit, “I would have fewer reservations about this if the software in question was open source and could have its code vetted by the various activist communities interested in such things. As it is now it's a black box that spits out information with very little to back it up besides claims in a sales brochure.”

During the reporting, I spoke with residents of Bellingham and San Francisco who complained that their departments were adopting the technology before adequately informing and consulting the community. “We wanted a greater explanation for how this all worked, and we were told it was all proprietary,” Kim Harris, a spokeswoman for Bellingham’s Racial Justice Coalition, told me. “We haven’t been comforted by the process.”

Azavea, the company that produces HunchLab, gave The Marshall Project the data and algorithms in use in St. Louis. Mark Hansen, a Columbia Journalism School professor who helped report the story, and Marshall Project managing editor Gabriel Dance analyzed that data to produce the graphics that accompanied the article. Other companies have been more guarded about sharing algorithms they consider proprietary, a reluctance that was the subject of a 2013 report by SF Weekly.

The tension between transparency and proprietary interests has shown up in reporting on other criminal justice tools that aim to predict the future, including my coverage of the Abel Assessment, a test that has been used to predict the risk of reoffending among sex offenders.

What to do instead

In the article, I explained that some police agencies are thinking about how to use predictive software to address the root causes of crime, rather than simply sending officers to patrol the areas where it is predicted. But some proponents of predictive policing have pushed back, saying that such an approach goes beyond the mandate of police. Jeffrey Brantingham, a researcher who helped develop PredPol, told Hansen, “I dare you to go into a roll call for police officers beginning their 12-hour shift and say, ‘Okay Officer Jones, your job is to solve drug addiction.’ They don’t have the tools and resources to do something like that.”

Many readers agreed, noting that police have been taken to task for deeper social and economic problems that are not their responsibility. “To blame this on police is short sighted, petty and lazy,” wrote ‘ePoch2701’ at The Verge. “Where is the blame on the politicians who make the laws that target minorities...Why are fines not relative to income? Where is the blame on the Courts that don’t allow more community service in lieu of fines and jail?”

Another reader, ‘Michael_F,’ wrote that a larger problem — and one that haunts St. Louis — is the fact that mostly white police officers work in predominantly black neighborhoods: “I can’t help but wonder if all the money spent on this software might be more effectively used to enact initiatives to hire more police officers that live in the community they work in and better reflect the cultural dynamic of the people in those communities.”

In response, ‘Ghotiol’ wrote, “You can only hire people that apply for the job, and I can’t imagine that there are many applicants from a place that views the police so negatively.” Numerous police departments around the country have described difficulties recruiting members of minority communities.

Perhaps the most provocative suggestion came from Verge reader ‘rayf_,’ who recommended that software developers shift their focus to predicting which police officers are most likely to be racially prejudiced: “I’d be much more interested in an app that crawls for sentiments from officers in chat rooms, youtube, facebook, forums, etc. that would indicate bias.”